Build a RAG chatbot to answer questions about Python libraries

Interested in asking questions about Python’s latest and greatest libraries? This is the chatbot for you! Fleet Context offers 4M+ high-quality custom embeddings of the top 1000+ Python libraries, and Panel provides a chat interface UI. Together, they let us build a Retrieval-Augmented Generation (RAG) chatbot.

Why is this chatbot useful? Most language models are not trained on the most up-to-date Python package docs, so they lack information about recent libraries such as LlamaIndex and LangChain. To answer questions about these libraries, we can retrieve relevant passages from the library docs and have the model generate its answer from the retrieved information.
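To make the retrieve-then-generate idea concrete, here is a minimal, self-contained sketch of the retrieval step. It is an illustration only: it assumes tiny hand-made vectors in place of real embeddings, whereas Fleet Context serves precomputed embeddings from a hosted vector database. The names `DOCS`, `cosine`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math

# Hypothetical toy corpus: (doc snippet, hand-made 3-d "embedding").
# Real systems use model-generated embeddings with hundreds of dimensions.
DOCS = [
    ("Panel is an open-source Python library for building dashboards and apps.", [0.9, 0.1, 0.0]),
    ("LangChain is a framework for developing applications powered by LLMs.", [0.1, 0.9, 0.1]),
    ("NumPy provides N-dimensional arrays and numerical routines.", [0.0, 0.2, 0.9]),
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector, k=2):
    """Return the k snippets whose vectors are most similar to the query vector."""
    ranked = sorted(DOCS, key=lambda doc: cosine(query_vector, doc[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, snippets):
    """Prepend the retrieved snippets so the model answers from them."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {question}"


# A query vector that points roughly in the direction of the "Panel" document.
snippets = retrieve([0.8, 0.2, 0.1], k=1)
print(build_prompt("What is HoloViz Panel?", snippets))
```

In the app built in this post, Fleet Context's `query` function plays the role of `retrieve`, and the formatted context is sent to an OpenAI model.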

Run the app: https://huggingface.co/spaces/ahuang11/panel-fleet

Code: https://huggingface.co/spaces/ahuang11/panel-fleet/tree/main

Access the entire Python universe with Fleet Context

Just a few weeks ago, Fleet AI launched Fleet Context, a CLI and open-source corpus for the Python ecosystem with 4M+ high-quality embeddings of the top 1000+ Python libraries. It currently offers embeddings for all of the top 1225 Python libraries and adds more libraries and versions every day. All the embeddings are available for download. Very impressive and useful!

How do we use Fleet Context to ask questions about 1000+ Python packages? After installing the package with `pip install fleet-context`, we can run Fleet Context either from the command line or in a Python console:

1. Command line interface

Once we set the OpenAI API key as an environment variable (`export OPENAI_API_KEY=xxx`), we can run `context` in the command line and start asking questions about Python libraries. For example, here I asked “What is HoloViz Panel?”. What I really like about Fleet is that it provides references for us to check.

2. Python console

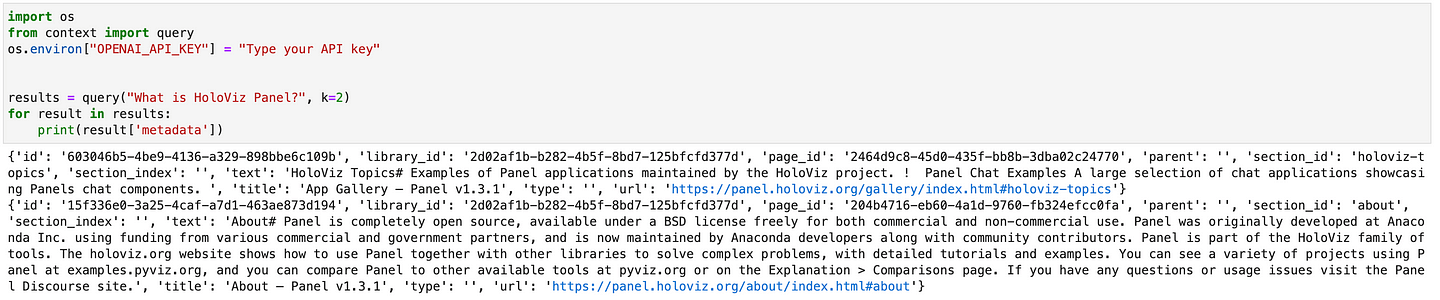

We can query embeddings directly from the provided hosted vector database with the `query` function from the `context` package. When we ask “What is HoloViz Panel?”, it returns the requested number (k=2) of related text chunks from the Panel docs.

Note that the returned results include plenty of metadata, such as `library_id`, `page_id`, `parent`, `section_id`, `title`, `text`, and `type`, all of which are available for us to use and query.
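Since each result is a dictionary whose `metadata` holds these fields, a small helper can turn a list of results into readable, numbered citations. This is a sketch that assumes the result shape used later in this post (`response['metadata']['url']` and `response['metadata']['text']`) plus the `title` field listed above. `format_results` is a hypothetical name, and `sample_results` is made-up data standing in for a real `query(...)` call.

```python
def format_results(results, max_chars=80):
    """Summarize query results as numbered 'title (url): snippet' lines.

    Assumes each result looks like {"metadata": {"title": ..., "url": ..., "text": ...}};
    missing fields fall back to placeholders instead of raising KeyError.
    """
    lines = []
    for i, result in enumerate(results, start=1):
        meta = result.get("metadata", {})
        title = meta.get("title", "<untitled>")
        url = meta.get("url", "<no url>")
        # Flatten newlines and truncate so each result fits on one line.
        snippet = meta.get("text", "").replace("\n", " ")[:max_chars]
        lines.append(f"{i}. {title} ({url}): {snippet}")
    return "\n".join(lines)


# Made-up results shaped like the metadata described above (not real Fleet Context output).
sample_results = [
    {"metadata": {"title": "Overview", "url": "https://example.com/panel/overview",
                  "text": "Example text chunk about Panel."}},
    {"metadata": {"title": "Chat", "url": "https://example.com/panel/chat",
                  "text": "Another example\ntext chunk."}},
]
print(format_results(sample_results))
```

With the package installed and network access, the same helper could be applied to live results, e.g. `format_results(query("What is HoloViz Panel?", k=2))`.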

Build a Panel chatbot to ask questions about Python libraries

Let’s build a Panel chatbot UI for Fleet Context in the following three steps:

0. Import packages

Before we get started, make sure the needed packages are installed (`pip install fleet-context openai panel`), then import them:

```python
from context import query
from openai import AsyncOpenAI
import panel as pn

pn.extension()
```

1. Define the system prompt

Full credit to the Fleet Context team: we took this system prompt from their code and tweaked it a bit:

```python
# taken from fleet context
SYSTEM_PROMPT = """
You are an expert in Python libraries. You carefully provide accurate, factual, thoughtful, nuanced answers, and are brilliant at reasoning. If you think there might not be a correct answer, you say so.

Each token you produce is another opportunity to use computation, therefore you always spend a few sentences explaining background context, assumptions, and step-by-step thinking BEFORE you try to answer a question.

Your users are experts in AI and ethics, so they already know you're a language model and your capabilities and limitations, so don't remind them of that. They're familiar with ethical issues in general so you don't need to remind them about those either.

Your users are also in a CLI environment. You are capable of writing and running code. DO NOT write hypothetical code. ALWAYS write real code that will execute and run end-to-end.

Instructions:
- Be objective, direct. Include literal information from the context, don't add any conclusion or subjective information.
- When writing code, ALWAYS have some sort of output (like a print statement). If you're writing a function, call it at the end. Do not generate the output, because the user can run it themselves.
- ALWAYS cite your sources. Context will be given to you after the text ### Context source_url ### with source_url being the url to the file. For example, ### Context https://example.com/docs/api.html#files ### will have a source_url of https://example.com/docs/api.html#files.
- When you cite your source, please cite it as [num] with `num` starting at 1 and incrementing with each source cited (1, 2, 3, ...). At the bottom, have a newline-separated `num: source_url` at the end of the response. ALWAYS add a new line between sources or else the user won't be able to read it. DO NOT convert links into markdown, EVER! If you do that, the user will not be able to click on the links.

For example:
**Context 1**: https://example.com/docs/api.html#pdfs
I'm a big fan of PDFs.

**Context 2**: https://example.com/docs/api.html#csvs
I'm a big fan of CSVs.

### Prompt ###
What is this person a big fan of?

### Response ###
This person is a big fan of PDFs[1] and CSVs[2].

1: https://example.com/docs/api.html#pdfs

2: https://example.com/docs/api.html#csvs
"""
```

2. Define chat interface

The key component of a Panel chat interface is `pn.chat.ChatInterface`. In its `callback`, we define how the chatbot responds; here, that is the `answer` function.
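Panel calls the callback with three arguments: the message `contents`, the `user` who sent it, and the chat `instance`. When the callback is an (async) generator, each yielded value replaces the content of the response message, which is why the `answer` function yields the accumulated `message` rather than individual tokens. The Panel-free sketch below mimics that behavior with a fake token stream. `fake_token_stream`, `echo_answer`, and `render` are hypothetical stand-ins for the OpenAI stream, the callback, and Panel's UI updates.

```python
import asyncio


async def fake_token_stream(text):
    """Hypothetical stand-in for an OpenAI stream: yields one word-token at a time.

    Tokens after the first carry their leading space, as LLM tokens often do.
    """
    for i, word in enumerate(text.split(" ")):
        await asyncio.sleep(0)  # hand control back to the event loop, like real network I/O
        yield word if i == 0 else " " + word


async def echo_answer(contents, user, instance):
    """Callback with the same (contents, user, instance) signature Panel uses.

    Yields the *accumulated* message so each update can replace the previous one.
    """
    message = ""
    async for token in fake_token_stream(f"You said: {contents}"):
        message += token
        yield message


async def render(callback, contents):
    """Mimic the UI: record every yielded value; the last one is what stays on screen."""
    updates = []
    async for value in callback(contents, "user", None):
        updates.append(value)  # the UI would overwrite the message with `value`
    return updates


updates = asyncio.run(render(echo_answer, "hi Panel"))
print(updates)
```

If the callback yielded bare tokens instead, each update would overwrite the previous one and only the last token would remain visible.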

In the `answer` function, we:

- initialize the message list with the system prompt;
- use the Fleet Context `query` function to retrieve k=3 relevant text chunks for the given question;
- format the retrieved text chunks, their URLs, and the user message into the OpenAI message format;
- pass the message history to an OpenAI model;
- stream the response from OpenAI asynchronously.
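The formatting part of these steps has no UI or network dependency, so it can be isolated and tested on its own. This sketch assumes the result shape the `answer` function relies on (`response['metadata']['url']` and `response['metadata']['text']`) and simplifies the history to a single turn, whereas `answer` serializes the whole chat. `build_context` and `build_messages` are hypothetical helper names, and `fake_results` is made-up data standing in for `query(contents, k=3)`.

```python
def build_context(results):
    """Format retrieved chunks as '**Context N**: url' headers followed by their text.

    Numbering starts at 1 to match the citation example in the system prompt.
    """
    context = ""
    for i, result in enumerate(results, start=1):
        meta = result["metadata"]
        context += f"\n\n**Context {i}**: {meta['url']}\n{meta['text']}"
    return context


def build_messages(system_prompt, question, context):
    """Assemble OpenAI-style chat messages: system prompt, the question, then the context.

    Both the question and the retrieved context use the "user" role, mirroring how
    `answer` serializes the user's message and the "Fleet Context" message.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
        {"role": "user", "content": context},
    ]


fake_results = [
    {"metadata": {"url": "https://example.com/a", "text": "Chunk A"}},
    {"metadata": {"url": "https://example.com/b", "text": "Chunk B"}},
]
messages = build_messages(
    "You are an expert in Python libraries.", "What is Panel?", build_context(fake_results)
)
for m in messages:
    print(m["role"], "->", m["content"][:40].replace("\n", " "))
```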

```python
async def answer(contents, user, instance):
    # start with system prompt
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]

    # add context to the user input
    context = ""
    fleet_responses = query(contents, k=3)
    # number contexts from 1 to match the citation numbering in SYSTEM_PROMPT
    for i, response in enumerate(fleet_responses, start=1):
        context += (
            f"\n\n**Context {i}**: {response['metadata']['url']}\n"
            f"{response['metadata']['text']}"
        )
    instance.send(context, avatar="🛩️", user="Fleet Context", respond=False)

    # get history of messages (skipping the intro message)
    # and serialize fleet context messages as "user" role
    messages.extend(
        instance.serialize(role_names={"user": ["user", "Fleet Context"]})[1:]
    )

    openai_response = await client.chat.completions.create(
        model=MODEL, messages=messages, temperature=0.2, stream=True
    )

    # accumulate streamed tokens and yield the growing message,
    # so Panel updates the response in place
    message = ""
    async for chunk in openai_response:
        token = chunk.choices[0].delta.content
        if token:
            message += token
            yield message


# assumption: the model name is not shown in this post; any OpenAI chat model should work
MODEL = "gpt-3.5-turbo"
client = AsyncOpenAI()  # reads OPENAI_API_KEY from the environment
intro_message = pn.chat.ChatMessage("Ask me anything about Python libraries!", user="System")
chat_interface = pn.chat.ChatInterface(intro_message, callback=answer, callback_user="OpenAI")
```

3. Format everything in a template

Finally, we place everything in a template and run `panel serve app.py` from the command line to get the final app:

```python
template = pn.template.FastListTemplate(
    main=[chat_interface],
    title="Panel UI of Fleet Context 🛩️",
)
template.servable()
```

Now you should have a working AI chatbot that can answer questions about Python libraries. If you would like to add more complex RAG features, note that LlamaIndex has incorporated Fleet Context into its system. Here is a guide if you would like to experiment with Fleet Context in LlamaIndex: Fleet Context Embeddings - Building a Hybrid Search Engine for the Llamaindex Library.
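The system prompt asks the model to end every answer with newline-separated `num: source_url` lines. If you want to post-process those sources in the app, for example to display them separately, a simple regular expression is enough. This is a sketch, not part of the original app: `extract_citations` and `strip_citation_footer` are hypothetical helpers, and the pattern assumes the model follows the footer format exactly (any line in the answer body that starts with a number, a colon, and a space would also be matched).

```python
import re

# Matches footer lines such as "1: https://example.com/docs/api.html#pdfs".
CITATION_LINE = re.compile(r"^(\d+): (\S+)\s*$", re.MULTILINE)


def extract_citations(response_text):
    """Map citation numbers to source URLs from a response's footer lines."""
    return {int(num): url for num, url in CITATION_LINE.findall(response_text)}


def strip_citation_footer(response_text):
    """Remove the 'num: source_url' lines, leaving only the answer body."""
    return CITATION_LINE.sub("", response_text).strip()


# The example response from the system prompt.
example = """This person is a big fan of PDFs[1] and CSVs[2].

1: https://example.com/docs/api.html#pdfs

2: https://example.com/docs/api.html#csvs
"""
print(extract_citations(example))
print(strip_citation_footer(example))
```

Because the prompt forbids markdown links, the URLs stay as plain tokens, which keeps the pattern this simple.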

Conclusion

In this blog post, we used Fleet Context and Panel to create a RAG chatbot that has access to the Python universe. We can ask questions about any of the top 1000+ Python packages and get answers grounded in the package docs, with references to check.

If you are interested in learning more about how to build AI chatbots in Panel, please read our related blog posts:

- Building AI Chatbots with Mistral and Llama2

- Building a Retrieval Augmented Generation Chatbot

- How to Build Your Own Panel AI Chatbots

If you find Panel useful, please consider giving us a star on GitHub (https://github.com/holoviz/panel). Happy coding!